Evaluation

Algorithms and implementations involved in the benchmark

| Implementation (measures) | Description |
| --- | --- |
| JWordnetSim (Lin, JCn) | Java implementation of WordNet measures. We used version 1.0.0 of the library in conjunction with WordNet 2.0. The information content files were taken from the WordNet::Similarity project. |
| JWSL (Pirro & Seco, Resnik, Lin, JCn) | Supports additional similarity measures compared to JWordnetSim. It also uses intrinsic information content, which is computed directly from WordNet. The library is not freely available, but the authors provide it upon written request. |
| WikipediaMiner (WLM) | This toolkit is an official implementation of the Wikipedia Link Measure. It was used with a Wikipedia snapshot from November 2013. |
| ESAlib (ESA) | ESA implementation, used with a Wikipedia snapshot from 2005. |
| Word2vec (NNLM) | Skip-gram with negative sampling, trained on English Wikipedia using the word2vec software. The pretrained word vectors were retrieved from the word2vec homepage (Google News corpus) and from Omer Levy's webpage (Wikipedia corpus). Both resources use 300 dimensions. |
| Bag of Words on whole document context (BOW) | Own implementation using an English Wikipedia dump from 2011. Disambiguation: Wikipedia Search or manual disambiguation. Preprocessing: stop words are removed, the remaining terms are sorted by term frequency, and the N most frequent terms are kept (we used N=10000). Term weighting: TF-IDF, where TF is the frequency of a term in the article and IDF is the inverse document frequency computed over the entire Wikipedia. Similarity function: cosine similarity. |
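
The BOW pipeline described in the table (stop-word removal, selection of the N most frequent terms, TF-IDF weighting, cosine similarity) can be sketched roughly as follows. This is a minimal illustration and not the implementation used in the benchmark: the whitespace tokenizer, the tiny stop-word list, the `log(N / df)` form of IDF, and all function names are assumptions made for the example.

```python
import math
from collections import Counter

# Illustrative stop-word list (assumption); a real list would be much longer.
STOP_WORDS = {"the", "a", "an", "of", "and", "is", "in", "to"}


def tokenize(text):
    """Lowercase, split on whitespace, keep alphabetic non-stop-word tokens."""
    return [t for t in text.lower().split() if t.isalpha() and t not in STOP_WORDS]


def top_terms(text, n):
    """Raw term frequencies of the n most frequent terms in the text."""
    return dict(Counter(tokenize(text)).most_common(n))


def idf_table(documents):
    """IDF over the whole collection, here assumed to be log(N / df)."""
    n_docs = len(documents)
    df = Counter()
    for doc in documents:
        df.update(set(tokenize(doc)))
    return {term: math.log(n_docs / count) for term, count in df.items()}


def tfidf_vector(text, idf, n=10000):
    """Sparse TF-IDF vector restricted to the n most frequent terms."""
    return {t: tf * idf.get(t, 0.0) for t, tf in top_terms(text, n).items()}


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)
```

In this sketch, the similarity of two words would be the cosine between the TF-IDF vectors of the Wikipedia articles they were disambiguated to.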

Results for WordNet measures - JWNL Library

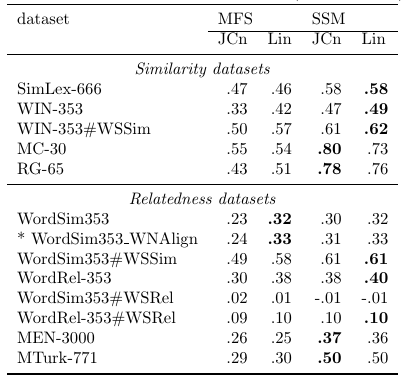

A word often matches multiple synsets. Both the JWordnetSim and JWSL libraries offer two ways to deal with this situation. The first option is to select the first sense of the given word, which corresponds to the Most Frequent Sense (MFS) option. The second option is to let the library return the similarity of the combination of senses that maximizes it. We call this Synset Similarity Maximization (SSM).
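
The difference between the two options can be illustrated with a short sketch. It does not use the JWordnetSim or JWSL APIs: the sense identifiers, the toy similarity scores, and the function names below are hypothetical, and `sim` stands for any synset-level measure such as Lin or JCn.

```python
from itertools import product


def mfs_similarity(senses_a, senses_b, sim):
    """Most Frequent Sense: compare only the first-listed sense of each word."""
    if not senses_a or not senses_b:
        return None  # at least one word has no known sense
    return sim(senses_a[0], senses_b[0])


def ssm_similarity(senses_a, senses_b, sim):
    """Synset Similarity Maximization: best score over all sense pairs."""
    if not senses_a or not senses_b:
        return None
    return max(sim(a, b) for a, b in product(senses_a, senses_b))


# Hypothetical sense inventory; the first entry plays the role of the first sense.
SENSES = {
    "bank": ["bank#river", "bank#finance"],
    "money": ["money#currency"],
}

# Hypothetical synset-level scores, invented purely for illustration.
TOY_SCORES = {
    frozenset({"bank#river", "money#currency"}): 0.1,
    frozenset({"bank#finance", "money#currency"}): 0.8,
}


def toy_sim(a, b):
    """Look up a toy score; unknown pairs default to 0.0."""
    return TOY_SCORES.get(frozenset({a, b}), 0.0)


mfs = mfs_similarity(SENSES["bank"], SENSES["money"], toy_sim)
ssm = ssm_similarity(SENSES["bank"], SENSES["money"], toy_sim)
```

Because SSM takes the maximum over all sense pairs, including the pair of first senses, its score is never lower than the MFS score for the same word pair.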

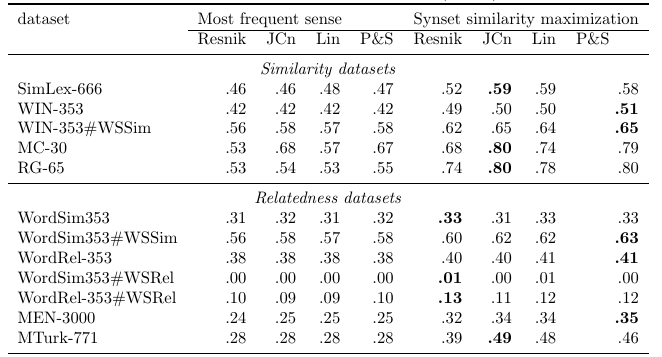

Results for WordNet measures - JWSL Library

Results for Wikipedia algorithms

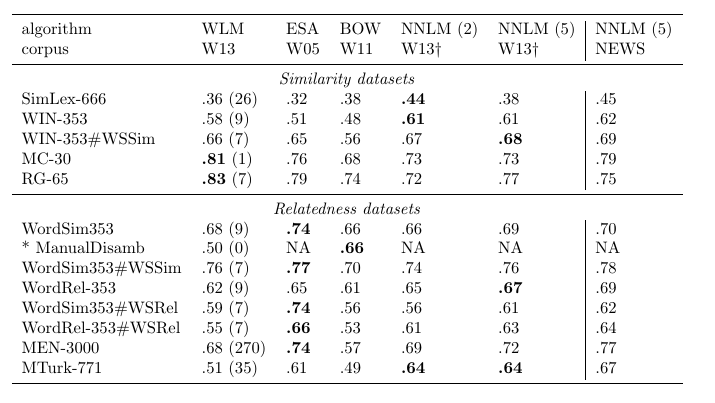

Results for distributional measures. For the WLM measure, the number of words that were not disambiguated or recognized is listed in parentheses. For NNLM, the window size is listed in parentheses. W followed by a number indicates the year of the Wikipedia snapshot used as the corpus. The dagger symbol marks a corpus that was obtained via personal communication. NEWS denotes vectors trained on the Google News corpus (100 billion words). The highest result on Wikipedia is listed in bold.

Overall results

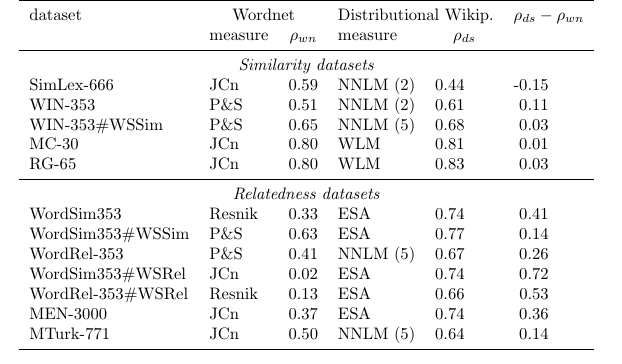

This table compares the best results obtained by the WordNet and Wikipedia measures on each dataset.